Interpreting cosmological simulations: Spherinator and HiPSter

Responsible Partner: HITS

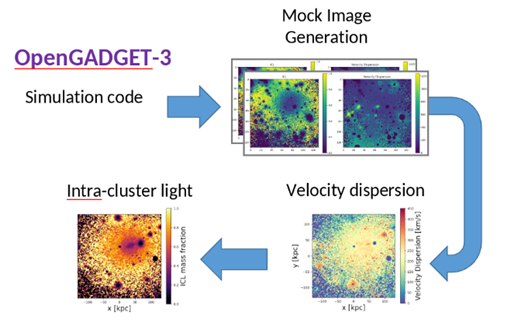

The data produced by exascale cosmological simulations will be not only large (exceeding several petabytes) but also deep: hundreds of billions of resolution elements, each described by hundreds of features that correspond to physical properties of galaxies and large-scale structures, in a representative region of the universe evolving across hundreds of snapshots. While many ML tools have been developed to help scientists analyze large observational datasets, comparable solutions for interpreting cosmological simulation outputs are lacking.

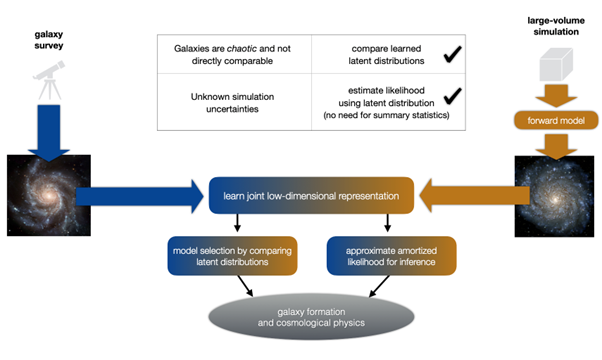

We have created the Spherinator and HiPSter tools (Polsterer et al. 2024) to maximize the discovery potential of simulations by enabling scientists to explore and analyze the raw outputs produced by any cosmological code. The tools use representation learning to automatically learn a compact, low-dimensional representation of the simulated objects that naturally describes their intrinsic characteristics. The data are then projected onto this latent space for interactive inspection, visual interpretation, sample selection, and local analysis.
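As a rough illustration of projecting objects into a low-dimensional latent space and then selecting samples there, the following minimal sketch uses plain PCA as a linear stand-in for a learned encoder. It is not the Spherinator or HiPSter API and makes no claim about the models those tools train. The function names, the toy random data, and the choice of two latent dimensions are all assumptions made for this example.

```python
import numpy as np


def fit_linear_projection(features, n_latent=2):
    """Fit a PCA projection (a linear stand-in for a learned encoder)."""
    mean = features.mean(axis=0)
    scale = features.std(axis=0)
    scale[scale == 0] = 1.0  # avoid division by zero for constant features
    standardized = (features - mean) / scale
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(standardized, full_matrices=False)
    return mean, scale, vt[:n_latent]


def project(features, mean, scale, components):
    """Map feature vectors to their latent coordinates."""
    return ((features - mean) / scale) @ components.T


def select_neighbours(latent, query, k=5):
    """Return indices of the k objects closest to `query` in latent space."""
    distances = np.linalg.norm(latent - query, axis=1)
    return np.argsort(distances)[:k]


# Hypothetical toy data: 1000 objects, each described by 50 features.
rng = np.random.default_rng(0)
features = rng.normal(size=(1000, 50))

mean, scale, components = fit_linear_projection(features, n_latent=2)
latent = project(features, mean, scale, components)

# Sample selection: the objects most similar to object 0 in latent space.
neighbours = select_neighbours(latent, latent[0], k=5)
```

In this sketch, similarity is plain Euclidean distance in the latent space. A nonlinear learned representation would replace `fit_linear_projection` and `project`, while the selection step could stay the same.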

Simulations alone do not lead to groundbreaking discoveries. Our tools are designed to bridge the gap between the complex model predictions produced by simulations and observational data from large galaxy surveys. By integrating observed and simulated data into a single low-dimensional representation, these ML tools enable powerful simulation-based inference and model selection techniques that maximally exploit the information contained in the raw data.

An interactive web demo using more than 60,000 synthetic images of simulated galaxies from the IllustrisTNG project is also available.

Repo: github.com/HITS-AIN/Spherinator | github.com/HITS-AIN/HiPSter

Documentation: spherinator.readthedocs.io/en/latest/index.html

Multimedia material: video of demo: drive.google.com/file/d/1al4hJgt_sPSFu4q10bSrZaCcw_OVSrfs/view?usp=sharing

Publications: Polsterer K.L., Doser B., Fehlner A., Trujillo-Gomez S., 2024, arXiv, arXiv:2406.03810. doi:10.48550/arXiv.2406.03810